- TRANSDUCERS

- TRANSDUCERS

- BASIC COMPONENTS DK

- BASIC COMPONENTS DK

- MARKETPLACE

- MARKETPLACE

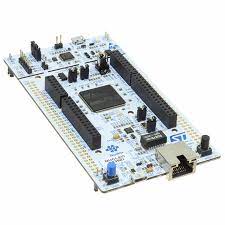

- DEVELOPMENT BOARDS & KITS

- DEVELOPMENT BOARDS & KITS

- CABLE ASSEMBLIES

- CABLE ASSEMBLIES

- RF AND WIRELESS

- RF AND WIRELESS

- BOXES ENCLOSURES RACKS

- BOXES ENCLOSURES RACKS

- AUDIO PRODUCTS

- AUDIO PRODUCTS

- FANS-BLOWERS-THERMAL MANAGEMENT

- FANS-BLOWERS-THERMAL MANAGEMENT

- WIRELESS MODULES

- WIRELESS MODULES

- TERMINALS

- TERMINALS

- Cables/Wires

- Cables/Wires

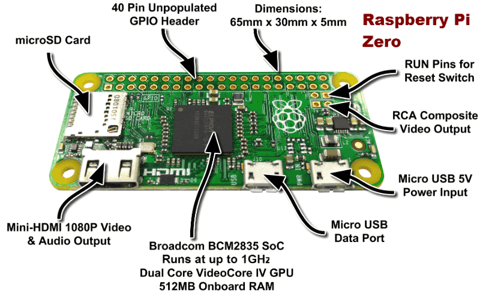

- SINGLE BOARD COMPUTER

- SINGLE BOARD COMPUTER

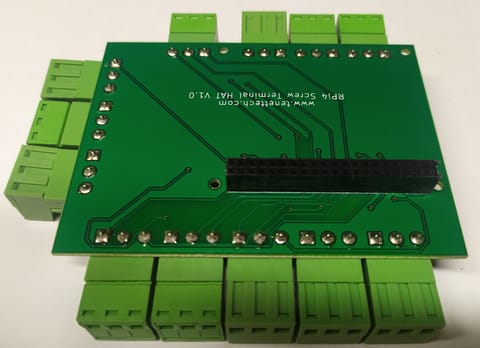

- BREAKOUT BOARDS

- BREAKOUT BOARDS

- LED

- LED

- TEST AND MEASUREMENT

- TEST AND MEASUREMENT

- DEVELOPMENT BOARDS AND IC's

- DEVELOPMENT BOARDS AND IC's

- EMBEDDED COMPUTERS

- EMBEDDED COMPUTERS

- OPTOELECTRONICS

- OPTOELECTRONICS

- INDUSTRAL AUTOMATION AND CONTROL

- INDUSTRAL AUTOMATION AND CONTROL

- COMPUTER EQUIPMENT

- COMPUTER EQUIPMENT

- CONNECTORS & INTERCONNECTS

- CONNECTORS & INTERCONNECTS

- MAKER/DIY EDUCATIONAL

- MAKER/DIY EDUCATIONAL

- TOOLS

- TOOLS

- MOTORS/ACTUATORS/SOLEENOIDS/DRIVERS

- MOTORS/ACTUATORS/SOLEENOIDS/DRIVERS

- FPGA HARDWARE

- FPGA HARDWARE

- ROBOTICS & AUTOMATION

- ROBOTICS & AUTOMATION

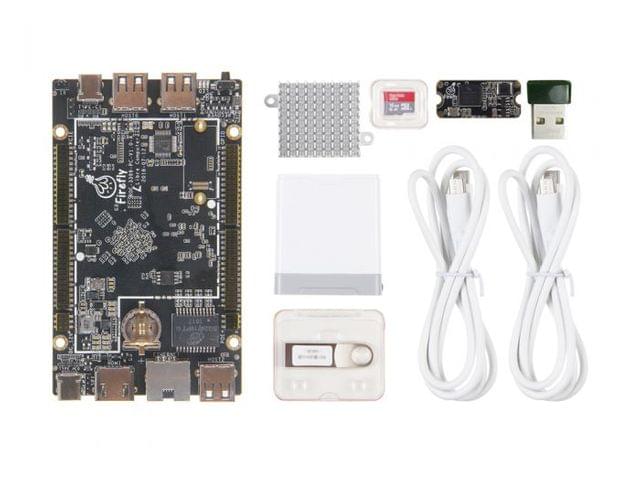

Built on the AI-specific APiM framework, the NCC S1 is a modular deep neural network accelerator that needs no external cache. It targets high-performance edge computing, in particular vision-based deep learning and AI algorithm acceleration. The card is small, draws very little power, and offers high peak performance. It comes with complete, easy-to-use model training tools, example network models, and a professional hardware platform, so it can be put to work quickly in AI applications.

5.6 TOPS Peak Performance

Built around an embedded neural network processor (NPU), the NCC S1 has 28,000 parallel neural computing cores and supports on-chip parallel and in-situ computation. Peak throughput reaches 5.6 TOPS, which the vendor describes as dozens of times higher than other solutions on the market. This lets it handle complex, compute-dense workloads in high-performance edge computing.
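
As a rough sanity check on the peak figure, the sketch below multiplies the core count by the 100MHz clock listed in the specification table. It assumes each core completes one multiply-accumulate per cycle, counted as 2 operations; that per-core rate is an assumption made for illustration, not a vendor statement, and the function name `peak_tops` is hypothetical.

```python
def peak_tops(cores: int, clock_hz: float, ops_per_core_per_cycle: int = 2) -> float:
    """Estimate peak throughput in TOPS (tera-operations per second).

    ops_per_core_per_cycle=2 is an ASSUMPTION: one multiply-accumulate
    per core per cycle, counted as one multiply plus one add.
    """
    return cores * clock_hz * ops_per_core_per_cycle / 1e12


# Figures taken from the product description: 28,000 cores at 100 MHz.
estimate = peak_tops(cores=28_000, clock_hz=100e6)
print(f"Estimated peak: {estimate:.1f} TOPS")
```

Under that assumption the estimate matches the quoted 5.6 TOPS, which suggests the peak figure is a theoretical maximum rather than a measured benchmark result.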

AI Processing Framework APiM

Built on the AI-specific MPE matrix engine and the APiM (AI Processing in Memory) framework, the NPU operates without instructions, a bus, or an external DDR cache. Once the network has been preloaded, large volumes of data can be streamed directly into and out of the chip, which greatly increases AI processing speed and reduces energy consumption.

9.3 TOPS/W High Energy Efficiency

The NPU on the NCC S1 neural network computing card is manufactured on a 28nm process. It draws only 300mW at a throughput of 2.8 TOPS, for an energy efficiency of up to 9.3 TOPS/W. This combination of strong computing capability and very low power consumption is a significant advantage for edge computing on terminal devices.
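
The efficiency figure follows directly from the two numbers quoted above. A minimal sketch of the arithmetic, where the helper name `tops_per_watt` is hypothetical:

```python
def tops_per_watt(throughput_tops: float, power_mw: float) -> float:
    """Energy efficiency in TOPS/W, given throughput in TOPS and power in milliwatts."""
    return throughput_tops / (power_mw / 1000.0)


# Figures from the description: 2.8 TOPS at 300 mW.
efficiency = tops_per_watt(throughput_tops=2.8, power_mw=300)
print(f"Energy efficiency: {efficiency:.2f} TOPS/W")
```

The result is about 9.33 TOPS/W, which the description rounds down to 9.3 TOPS/W.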

High-performance Hardware Platform

The NCC S1 neural network computing card can be paired with the ROC-RK3399-PC open source main board. With its high-performance six-core RK3399 processor and abundant hardware interfaces, the board makes it quick to assemble an edge computing hardware platform, build product prototypes, and so speed up AI product development.

Supporting Model Training Tools

The card comes with PLAI (People Learn AI), a complete and easy-to-use model training tool based on PyTorch. PLAI runs on Windows 10 and Ubuntu 16.04 and makes it easier and quicker to add custom network models, which greatly lowers the technical barrier to applying AI and makes the technology accessible to more people.

Example Network Training Models

Three example network training models are supported: GNet1, GNet18 and GNetfc. More examples are to follow, making it easy to test a wide range of deep learning applications on the device.

| Item | Specification |
| --- | --- |
| Size | 27.5 x 12.5 x 3.5 mm |
| NPU | Lightspeeur SPR2801S (28nm process, unique MPE and APiM architecture) |
| Peak | 5.6 TOPS @ 100MHz |
| Low Power | 2.8 TOPS @ 300mW |
| Platform | ROC-RK3399-PC |
| Framework | PyTorch and Caffe; TensorFlow support to follow |
| Tools | PLAI model training tool (supports the VGG-16-based GNet1, GNet18 and GNetfc network models); runs on Ubuntu and Windows |


NCC S1 Neural Network Computing Card - AI Package


- Ships in 10-12 working days


